Contents

- 1 What Does “Crawlable” Actually Mean?

- 2 What About Indexability?

- 3 Why This Matters More Than You Think

- 4 The Roadblocks That Kill Crawlability

- 5 What Kills Indexability

- 6 How to Check If Your Pages Are Actually Indexed

- 7 The Step-by-Step Fix Guide

- 8 Common Mistakes That Sabotage Your Efforts

- 9 Tools That Make Your Life Easier

- 10 Advanced Tips for Power Users

- 11 Troubleshooting Common Problems

- 12 The Mobile-First Reality

- 13 Measuring Your Success

- 14 Frequently Asked Questions

- 14.1 1. How long does it take for Google to index new pages?

- 14.2 2. Should I submit every page to Google Search Console?

- 14.3 3. Can I have too many pages indexed?

- 14.4 4. What’s the difference between crawl budget and indexing?

- 14.5 5. Do I need to worry about JavaScript and SEO?

- 14.6 6. How often should I check my indexing status?

- 15 Your Action Plan for Better Crawlability and Indexability

- 16 Conclusion

You’ve spent weeks building the perfect website. Your content is amazing, your design is sleek, and you’re ready to watch visitors pour in from Google. But then… nothing happens.

Your site isn’t showing up in search results, and you’re wondering what went wrong.

Here’s the thing most website owners don’t realize: Google needs to be able to find and understand your website before it can show it to searchers. It’s like opening a store in a hidden alley without putting up any signs – people can’t visit if they don’t know you exist.

This is where crawlability and indexability come into play. Think of them as the foundation of your entire SEO strategy. Without them, all your other SEO efforts are basically worthless. One of the key tools that supports this process is your XML sitemap—learn more in our guide on What is an XML Sitemap and Why is it Important for your Website.

Let me walk you through everything you need to know about making your website visible to Google, in plain English.

What Does “Crawlable” Actually Mean?

When we say a website is “crawlable,” we’re talking about how easily Google’s robots can discover and access your web pages.

Google doesn’t have humans sitting around manually checking every website on the internet. Instead, it uses automated programs called crawlers (or bots, or spiders – they all mean the same thing). These little digital workers constantly browse the web, following links from page to page like breadcrumbs.

Here’s how it works: Google’s crawler starts on a page it already knows about, then follows every link on that page to discover new pages. It’s like having a really dedicated intern who clicks on every single link they find, taking notes along the way.

If your page isn’t linked from somewhere else, or if there are technical barriers blocking the crawler, your page becomes invisible to Google. It’s there, but Google simply can’t find it.

Real-World Example

Imagine you run a local bakery website. You have a beautiful homepage, an “About Us” page, and a secret menu page that’s only accessible if someone types the exact URL. That secret menu page? It’s not crawlable because there are no links pointing to it from your other pages. Google’s crawler will never stumble upon it naturally.
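If you like seeing things in code, here’s a minimal Python sketch of that link-following process. The bakery’s link graph is made up for illustration. A real crawler like Googlebot does much more: it also reads sitemaps, schedules revisits, and respects robots.txt. Starting from the homepage and following links breadth-first, the secret menu page never turns up:

```python
from collections import deque

# Hypothetical link graph: each page maps to the pages it links to.
# "/secret-menu" exists, but no other page links to it.
SITE_LINKS = {
    "/": ["/about-us", "/menu"],
    "/about-us": ["/"],
    "/menu": ["/", "/about-us"],
    "/secret-menu": ["/"],
}


def discover(start: str, links: dict[str, list[str]]) -> set[str]:
    """Follow links breadth-first from a known page and return every page reached."""
    seen = {start}
    queue = deque([start])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in seen:  # queue each newly discovered page only once
                seen.add(target)
                queue.append(target)
    return seen


found = discover("/", SITE_LINKS)
print(sorted(found))                    # ['/', '/about-us', '/menu']
print(sorted(set(SITE_LINKS) - found))  # ['/secret-menu']
```

The only way this crawler could ever reach `/secret-menu` is if a page it already knows about linked to it.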

What About Indexability?

Indexability is the next step in the process. Once Google finds your page (crawling), it needs to decide whether to add it to its massive database of web pages (indexing).

Think of Google’s index as a giant library catalog. When Google indexes your page, it’s essentially saying, “This page is worth remembering and potentially showing to people who search for relevant topics.”

The indexing process involves Google analyzing your page content, understanding what it’s about, and filing it away so it can be retrieved when someone searches for related terms.

But here’s the catch: not every page that gets crawled will get indexed. Google might choose to skip pages that are low-quality, duplicate, or marked as “not for indexing.”

Why This Matters More Than You Think

Let’s be brutally honest here: if your pages aren’t crawled and indexed, your SEO efforts are pointless.

You could have the most perfectly optimized title tags, the best keyword research, and content that would make Shakespeare weep with joy. But if Google can’t find and index your pages, none of that matters.

It’s like preparing the most amazing speech but never getting invited on stage to deliver it.

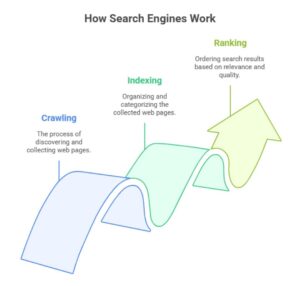

Here’s the process in simple terms:

- Google finds your page (crawling)

- Google adds it to its database (indexing)

- Google shows it in search results (ranking)

Miss step one or two, and step three never happens. No search rankings means no organic traffic. No organic traffic means no customers finding your business through Google.

The Roadblocks That Kill Crawlability

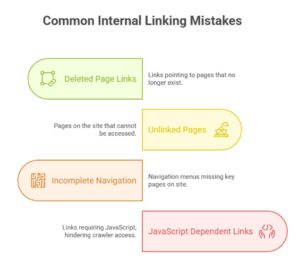

Broken Internal Linking

Your internal links are like hallways connecting different rooms in your house. If these hallways are blocked or lead nowhere, visitors (and Google’s crawlers) get lost.

Common internal linking mistakes include:

- Links that point to deleted pages

- Pages that aren’t linked from anywhere else on your site

- Navigation menus that don’t include important pages

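- Links that only work through JavaScript, such as buttons or click handlers with no real URL behind them (Google reliably follows only <a> links that have an href attribute)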

Quick Fix: Do a monthly check of your internal links. Most website platforms have plugins or tools that can automatically detect broken links.

Robots.txt Gone Wrong

Your robots.txt file is like a bouncer at a club – it decides who gets in and who doesn’t. But sometimes this bouncer gets a little overzealous and blocks important pages by mistake.

I’ve seen websites accidentally block their entire blog section or product pages because someone made a small typo in the robots.txt file. It’s like putting a “Do Not Enter” sign on your front door and wondering why no customers are coming in.

What to check:

- Make sure you’re not blocking important pages

- Don’t block your CSS or JavaScript files (Google needs these to understand your pages properly)

- Check the robots.txt report in Google Search Console, and use the URL Inspection tool to confirm that important pages aren’t blocked
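You can also sanity-check rules before uploading them. This minimal sketch uses Python’s built-in `urllib.robotparser` to show how a small typo (`Disallow: /blog` instead of `Disallow: /blog-drafts/`) blocks the entire blog. The URLs are hypothetical. One caveat: Python’s parser is simpler than Google’s. It applies the first matching rule and doesn’t understand `*` or `$` wildcards, while Google uses the most specific matching rule. So treat Search Console as the final word.

```python
from urllib.robotparser import RobotFileParser


def blocked_urls(robots_txt: str, urls: list[str], user_agent: str = "Googlebot") -> list[str]:
    """Return the URLs that these robots.txt rules would block for user_agent."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


# The typo: "/blog" matches every path that starts with "/blog".
TYPO_RULES = """
User-agent: *
Disallow: /blog
"""

# What was meant: block only the drafts folder.
INTENDED_RULES = """
User-agent: *
Disallow: /blog-drafts/
"""

URLS = [
    "https://example.com/blog/sourdough-tips",
    "https://example.com/blog-drafts/unfinished-post",
    "https://example.com/about-us",
]

print(blocked_urls(TYPO_RULES, URLS))      # blocks the real blog post AND the draft
print(blocked_urls(INTENDED_RULES, URLS))  # blocks only the draft
```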

Server Problems

If your website is slow or frequently down, Google’s crawlers might give up trying to access it. Imagine calling a business and getting a busy signal every time – eventually, you’d stop trying.

Server issues that hurt crawlability:

- Slow loading times (over 3 seconds)

- Frequent downtime

- Server errors (500 errors, etc.)

- Redirect chains (one redirect pointing to another) and redirect loops
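Redirect chains and loops are easy to spot once you map out where each URL sends visitors. Here’s a minimal Python sketch that follows a hypothetical redirect map and reports how many hops each old URL takes, or whether it loops back on itself. In practice you’d pull this map from your server configuration or a crawl tool’s export.

```python
# Hypothetical redirect map: each old URL points to where the server redirects it.
REDIRECTS = {
    "/old-cakes": "/cakes-2019",
    "/cakes-2019": "/cakes",  # a second hop: /old-cakes -> /cakes-2019 -> /cakes
    "/promo": "/sale",
    "/sale": "/promo",        # a loop: /promo and /sale redirect to each other
}


def trace_redirects(url: str, redirects: dict[str, str]) -> tuple[list[str], bool]:
    """Follow redirects from url; return the URLs visited and whether a loop was hit."""
    path = [url]
    visited = {url}
    while url in redirects:
        url = redirects[url]
        path.append(url)
        if url in visited:  # we've been here before, so this is a loop
            return path, True
        visited.add(url)
    return path, False


for start in ["/old-cakes", "/promo"]:
    path, is_loop = trace_redirects(start, REDIRECTS)
    label = "LOOP" if is_loop else f"{len(path) - 1} hop(s)"
    print(" -> ".join(path), f"[{label}]")
# /old-cakes -> /cakes-2019 -> /cakes [2 hop(s)]
# /promo -> /sale -> /promo [LOOP]
```

Any chain longer than one hop is worth collapsing so the old URL redirects straight to the final page. A loop never reaches a real page at all.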

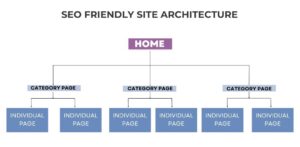

Poor Site Structure

A confusing website structure is like a maze without a map. Even if Google can technically access your pages, it might not understand how they relate to each other.

Good site structure looks like this:

- Homepage connects to main category pages

- Category pages connect to relevant sub-pages

- Every page is reachable within 3-4 clicks from the homepage

- Related pages link to each other
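The 3-4 click guideline is easy to check once you know which pages link where. This minimal Python sketch computes each page’s click depth from the homepage with a breadth-first search, which reaches every page by its shortest click path. The link graph is hypothetical, and the cutoff of 3 simply follows the guideline above:

```python
from collections import deque

# Hypothetical link graph: each page maps to the pages it links to.
LINKS = {
    "/": ["/cakes", "/bread"],
    "/cakes": ["/cakes/birthday"],
    "/bread": ["/bread/sourdough"],
    "/cakes/birthday": ["/cakes/birthday/custom"],
    "/cakes/birthday/custom": ["/cakes/birthday/custom/order-form"],
    "/bread/sourdough": [],
    "/cakes/birthday/custom/order-form": [],
}
MAX_CLICKS = 3  # rule-of-thumb cutoff, not a Google limit


def click_depths(links: dict[str, list[str]], home: str = "/") -> dict[str, int]:
    """Return the fewest clicks needed to reach each page from the homepage."""
    depth = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depth:  # first visit in BFS = shortest path
                depth[target] = depth[page] + 1
                queue.append(target)
    return depth


depths = click_depths(LINKS)
too_deep = {page: d for page, d in depths.items() if d > MAX_CLICKS}
print(too_deep)  # {'/cakes/birthday/custom/order-form': 4}
```

A page that doesn’t appear in the results at all can’t be reached from the homepage by following links.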

What Kills Indexability

Thin or Duplicate Content

Google doesn’t want to fill its index with junk. If your pages have very little content or copy content from other sources, Google might decide they’re not worth indexing.

Examples of thin content:

- Product pages with only a title and price

- Blog posts under 300 words with no real value

- Pages that are mostly images with little text
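If you want a rough way to flag thin pages in bulk, counting the visible words on each page is a reasonable first pass. Here’s a minimal Python sketch using the standard library’s `html.parser`. It skips `<script>` and `<style>` contents. The 300-word threshold is the rule of thumb from this article, not an official Google number, and the product page HTML is hypothetical:

```python
from html.parser import HTMLParser

THIN_THRESHOLD = 300  # this article's rule of thumb, not an official Google number


class VisibleWordCounter(HTMLParser):
    """Counts words in a page's text, ignoring <script> and <style> contents."""

    SKIPPED_TAGS = {"script", "style"}

    def __init__(self) -> None:
        super().__init__()
        self.skip_depth = 0  # greater than 0 while inside a skipped tag
        self.words = 0

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIPPED_TAGS:
            self.skip_depth += 1

    def handle_endtag(self, tag):
        if tag in self.SKIPPED_TAGS and self.skip_depth:
            self.skip_depth -= 1

    def handle_data(self, data):
        if not self.skip_depth:
            self.words += len(data.split())


def word_count(html: str) -> int:
    counter = VisibleWordCounter()
    counter.feed(html)
    counter.close()
    return counter.words


# Hypothetical product page with only a title and a price.
product_page = """
<html><body>
  <h1>Chocolate Fudge Cake</h1>
  <p>$24.99</p>
  <script>trackPageView();</script>
</body></html>
"""

count = word_count(product_page)
print(count, "words", "(thin)" if count < THIN_THRESHOLD else "(ok)")  # 4 words (thin)
```

Word count is only a signal. A short page that fully answers a question can be fine, so treat the flagged list as a starting point for manual review.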

Technical Tags Gone Wrong

Sometimes websites accidentally tell Google “don’t index this page” when they actually want it indexed. This happens through:

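- Noindex tags: A robots meta tag such as <meta name="robots" content="noindex"> explicitly tells Google not to index a page

- Canonical tags: When they point to the wrong URL, these tell Google to index a different version of the page instead

- X-Robots-Tag headers: The same noindex instruction can be sent in the page’s HTTP response headers, where it’s easy to miss because it never appears in the HTML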
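These tags are easy to miss when you’re just looking at a page in your browser. Here’s a minimal Python sketch that scans a page’s HTML and response headers for a noindex directive (in a robots meta tag or an X-Robots-Tag header) and for a canonical tag pointing at a different URL. The page, URLs, and headers are hypothetical. The canonical check is a plain string comparison, so it won’t treat relative and absolute versions of the same URL as equal.

```python
from html.parser import HTMLParser


def has_noindex(directives: str) -> bool:
    """True if a robots directive string contains noindex (or "none", shorthand for noindex, nofollow)."""
    # Treat ":" as a separator too, so "googlebot: noindex" is handled.
    tokens = {token.strip() for token in directives.lower().replace(":", ",").split(",")}
    return "noindex" in tokens or "none" in tokens


class IndexingTagFinder(HTMLParser):
    """Collects robots meta tag values and the canonical link from a page."""

    def __init__(self) -> None:
        super().__init__()
        self.robots_values = []  # content of <meta name="robots"> / <meta name="googlebot">
        self.canonical = None    # href of <link rel="canonical">, if any

    def handle_starttag(self, tag, attrs):
        attr = {name: (value or "") for name, value in attrs}
        if tag == "meta" and attr.get("name", "").lower() in ("robots", "googlebot"):
            self.robots_values.append(attr.get("content", ""))
        elif tag == "link" and "canonical" in attr.get("rel", "").lower().split():
            self.canonical = attr.get("href")


def indexing_report(url: str, html: str, headers: dict[str, str]) -> dict:
    finder = IndexingTagFinder()
    finder.feed(html)
    finder.close()
    # HTTP header names are case-insensitive, so normalize them before the lookup.
    header_value = {k.lower(): v for k, v in headers.items()}.get("x-robots-tag", "")
    return {
        "noindex": any(has_noindex(v) for v in finder.robots_values) or has_noindex(header_value),
        "canonical": finder.canonical,
        "canonical_points_elsewhere": finder.canonical is not None and finder.canonical != url,
    }


# Hypothetical page that accidentally carries both problems.
page_html = """
<head>
  <meta name="robots" content="noindex, follow">
  <link rel="canonical" href="https://example.com/cakes-old/">
</head>
"""
print(indexing_report("https://example.com/cakes/", page_html, {"Content-Type": "text/html"}))
# {'noindex': True, 'canonical': 'https://example.com/cakes-old/', 'canonical_points_elsewhere': True}
```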

Low-Quality Content

Google is picky about what deserves a spot in its index. Content that’s poorly written, provides no value to users, or is stuffed with keywords might get crawled but not indexed.

How to Check If Your Pages Are Actually Indexed

Before you can fix problems, you need to know if they exist. Here are simple ways to check your indexing status:

The site: Search Trick

Go to Google and type: site:yourwebsite.com

This gives you a rough estimate of how many of your pages Google has indexed. The count isn’t exact, but if you have 50 pages on your site and Google only shows 10 in this search, you’ve probably got indexing issues.

Google Search Console

This free tool from Google is like getting a report card for your website. It tells you:

- How many pages Google has indexed

- Which pages couldn’t be indexed and why

- Any crawling errors Google encountered

Manual Checking

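For important pages, paste the URL into the URL Inspection tool in Google Search Console, which tells you whether that exact page is indexed. You can also search for the page’s exact title in Google; if it doesn’t show up, it’s probably not indexed.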

The Step-by-Step Fix Guide

Step 1: Create a Logical Site Structure

Start with your homepage as the foundation. It should link to your main category pages, which then link to specific content pages.

Draw this out on paper if it helps. Your site structure should look like a family tree, not a tangled mess of random connections.

Step 2: Build Your XML Sitemap

Think of your XML sitemap as a restaurant menu for Google – it lists all the pages you want crawled and indexed.

Most website platforms can generate this automatically, but you need to make sure it includes:

- All your important pages

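- Accurate last-modified dates (<lastmod>) for pages you’ve updated

- Only the canonical, indexable versions of your pages (no redirects, broken URLs, or noindexed pages)

Don’t spend time fine-tuning <priority> or <changefreq> values, because Google ignores them. Once you have your sitemap, submit it through Google Search Console.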
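If your platform doesn’t generate a sitemap for you, the format is simple enough to build yourself. This minimal Python sketch writes a sitemap with only `<loc>` and `<lastmod>` entries, using the standard library’s `xml.etree.ElementTree` (Python 3.9 or later for the pretty-printing). The URLs and dates are hypothetical:

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"

# Hypothetical pages: (absolute URL, last-modified date in YYYY-MM-DD format).
PAGES = [
    ("https://example.com/", "2025-03-01"),
    ("https://example.com/menu", "2025-03-10"),
    ("https://example.com/about-us", "2024-11-20"),
]


def build_sitemap(pages: list[tuple[str, str]]) -> str:
    """Return sitemap XML listing each URL with its <lastmod> date."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        ET.SubElement(url, "lastmod").text = lastmod
    ET.indent(urlset)  # pretty-print the output (Python 3.9+)
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + ET.tostring(urlset, encoding="unicode")


print(build_sitemap(PAGES))
```

Save the output as a file such as `sitemap.xml` on your site, then submit its URL in Search Console.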

Step 3: Fix Your Internal Linking

Every page on your site should be linked from at least one other page. Here’s how to audit this:

- Use a tool like Screaming Frog or your website’s SEO plugin to find orphaned pages

- Create contextual links within your content to connect related pages

- Update your navigation menu to include important pages

- Add footer links to key pages
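Under the hood, an orphaned-page check is just a comparison between the pages you want indexed and the pages your site actually links to. This is roughly the comparison crawl tools make when you check a crawl against your sitemap. Here’s a minimal Python sketch of it. The page list and link map are hypothetical; in practice they’d come from your sitemap and a crawl export. Links from a page to itself don’t count.

```python
# Hypothetical data: the pages you want indexed (e.g. from your sitemap) and
# the internal links found on each page (e.g. from a crawl export).
SITEMAP_PAGES = ["/", "/about-us", "/menu", "/holiday-cookies"]
INTERNAL_LINKS = {
    "/": ["/about-us", "/menu"],
    "/about-us": ["/", "/menu"],
    "/menu": ["/"],
    "/holiday-cookies": ["/", "/holiday-cookies"],  # links out, but nothing links in
}


def find_orphans(pages: list[str], links: dict[str, list[str]], home: str = "/") -> list[str]:
    """Return pages (other than the homepage) that no other page links to."""
    linked_to = {
        target
        for source, targets in links.items()
        for target in targets
        if target != source  # a page linking to itself doesn't count
    }
    return [page for page in pages if page != home and page not in linked_to]


print(find_orphans(SITEMAP_PAGES, INTERNAL_LINKS))  # ['/holiday-cookies']
```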

Step 4: Optimize Your Content

Each page should have:

- Enough unique, valuable content to fully cover its topic (300 words is a common rule of thumb, not a Google requirement)

- A clear purpose and topic

- Proper headings (H1, H2, H3) that organize the information

- Internal links to related pages

Don’t just stuff keywords everywhere – write for humans first, search engines second.

Step 5: Monitor and Maintain

Set up Google Search Console and check it monthly for:

- New crawling errors

- Pages that have dropped out of the index

- Performance changes

Common Mistakes That Sabotage Your Efforts

Blocking CSS and JavaScript

Some people think blocking these files in robots.txt helps their crawl budget. It doesn’t – it actually hurts because Google needs these files to properly render your pages.

Forgetting About Mobile

Google primarily uses the mobile version of your site for indexing. If your mobile site has problems or missing content, your indexing will suffer.

Ignoring Page Speed

Slow pages frustrate both users and crawlers. If your server responds slowly, Google may crawl fewer of your pages, and pages that take forever to load might time out before Google can fully crawl them.

Over-Optimizing

Trying too hard to optimize every single page can backfire. Focus on creating genuinely helpful content rather than trying to game the system.

Tools That Make Your Life Easier

Free Tools

Google Search Console: Your best friend for monitoring crawling and indexing issues. It’s free and comes straight from Google.

Google PageSpeed Insights: Helps identify performance issues that slow your pages down for both users and crawlers.

Robots.txt report: Built into Google Search Console (it replaced the older robots.txt Tester), it shows which robots.txt files Google found for your site, when they were last crawled, and any errors or warnings.

Paid Tools

Screaming Frog SEO Spider: Great for auditing your entire site structure and finding technical issues. The free version crawls up to 500 URLs; a paid license removes that limit.

Ahrefs Site Audit: Comprehensive tool that identifies crawling and indexing problems along with other SEO issues.

SEMrush Site Audit: Another solid option for finding technical problems that affect crawlability.

Advanced Tips for Power Users

Understanding Crawl Budget

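Google doesn’t crawl every page on your site every day. It allocates a “crawl budget” based on how much crawling your server can handle without slowing down and how much Google wants to crawl your site (driven by how popular your pages are and how often they change). Crawl budget mainly matters for large sites or sites that add lots of pages quickly.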

To make the most of your crawl budget:

- Fix redirect chains and loops

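- Remove low-value pages, or block truly useless URLs (like endless filter combinations) in robots.txt; a noindex tag doesn’t save crawl budget, because Google still has to crawl the page to see it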

- Keep your site fast and responsive

- Update your content regularly

Using Log File Analysis

Your server logs show exactly how Google’s crawlers interact with your site. This advanced technique can reveal:

- Which pages Google crawls most often

- Crawling errors that don’t show up elsewhere

- Opportunities to optimize your crawl budget
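To give you a feel for what log file analysis looks like, here’s a minimal Python sketch. It reads access-log lines in the common “combined” format used by Apache and Nginx, keeps only requests whose user agent mentions Googlebot, and counts which paths were crawled and which status codes were returned. The log lines are made up. Keep in mind that anyone can fake a Googlebot user agent; Google recommends verifying real Googlebot traffic with a reverse DNS lookup, which this sketch doesn’t do.

```python
import re
from collections import Counter

# One request per line in the "combined" log format:
# IP - - [time] "METHOD /path PROTOCOL" status bytes "referrer" "user agent"
LOG_LINE = re.compile(
    r'(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+) [^"]*" '
    r'(?P<status>\d{3}) \S+ "[^"]*" "(?P<agent>[^"]*)"'
)


def googlebot_summary(lines: list[str]) -> tuple[Counter, Counter]:
    """Count crawled paths and returned status codes for requests claiming to be Googlebot."""
    paths, statuses = Counter(), Counter()
    for line in lines:
        match = LOG_LINE.match(line)
        if not match or "Googlebot" not in match["agent"]:
            continue  # skip unparseable lines and non-Googlebot visitors
        paths[match["path"]] += 1
        statuses[match["status"]] += 1
    return paths, statuses


GOOGLEBOT_UA = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
SAMPLE_LOG = [
    f'66.249.66.1 - - [10/Mar/2025:10:00:00 +0000] "GET /menu HTTP/1.1" 200 5120 "-" "{GOOGLEBOT_UA}"',
    f'66.249.66.1 - - [10/Mar/2025:10:05:00 +0000] "GET /menu HTTP/1.1" 200 5120 "-" "{GOOGLEBOT_UA}"',
    f'66.249.66.1 - - [10/Mar/2025:10:06:00 +0000] "GET /old-page HTTP/1.1" 404 310 "-" "{GOOGLEBOT_UA}"',
    '203.0.113.5 - - [10/Mar/2025:10:07:00 +0000] "GET /about-us HTTP/1.1" 200 2048 "-" "Mozilla/5.0 (Windows NT 10.0; Win64; x64)"',
]

paths, statuses = googlebot_summary(SAMPLE_LOG)
print(paths.most_common())  # [('/menu', 2), ('/old-page', 1)]
print(dict(statuses))       # {'200': 2, '404': 1}
```

Here the 404 on `/old-page` is exactly the kind of wasted crawl you’d want to fix with a redirect or by removing links to it.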

Structured Data Implementation

Adding structured data (schema markup) helps Google understand your content better and can make your pages eligible for rich results (enhanced listings like star ratings or business details) in search.
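Structured data is usually added as a JSON-LD script in your page’s HTML. This minimal Python sketch builds one for the bakery example using schema.org’s `Bakery` and `PostalAddress` types; the business details are made up. Before publishing markup like this, run the page through Google’s Rich Results Test to check it.

```python
import json

# Hypothetical business details for the bakery example.
bakery = {
    "@context": "https://schema.org",
    "@type": "Bakery",
    "name": "Example Street Bakery",
    "url": "https://example.com/",
    "telephone": "+1-555-0100",
    "address": {
        "@type": "PostalAddress",
        "streetAddress": "123 Example Street",
        "addressLocality": "Springfield",
        "postalCode": "12345",
        "addressCountry": "US",
    },
}


def json_ld_script(data: dict) -> str:
    """Wrap structured data in the <script> tag that goes in the page's HTML."""
    return '<script type="application/ld+json">\n' + json.dumps(data, indent=2) + "\n</script>"


print(json_ld_script(bakery))
```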

Troubleshooting Common Problems

“My New Pages Aren’t Getting Indexed”

Possible causes:

- Pages aren’t linked from other pages on your site

- Content is too thin or low-quality

- Technical issues are blocking crawlers

- Your site has low authority and Google isn’t crawling frequently

Solutions:

- Add internal links to new pages

- Improve content quality and length

- Request indexing with the URL Inspection tool in Google Search Console

- Share new content on social media to generate external signals

“My Pages Disappeared from Google”

Possible causes:

- Technical changes accidentally blocked crawlers

- Content was flagged as duplicate or low-quality

- Server issues prevented crawling

- A manual action from Google (listed in Search Console’s Manual actions report)

Solutions:

- Check Google Search Console for error messages

- Review recent changes to your website

- Ensure your content is unique and valuable

- Fix any technical issues immediately

“Google is Only Indexing Some of My Pages”

Possible causes:

- Internal linking issues

- Content quality varies across pages

- Some pages are accidentally set to noindex

- Crawl budget limitations

Solutions:

- Improve internal linking structure

- Audit content quality across all pages

- Check for noindex tags on missing pages

- Focus on your most important pages first

The Mobile-First Reality

Google began moving sites to mobile-first indexing in 2018, and it now primarily uses the mobile version of your website for indexing. This means:

- Your mobile site needs to have the same content as your desktop site

- Navigation should work perfectly on mobile devices

- Page speed is crucial on mobile

- Structured data should be present on mobile pages

Measuring Your Success

Key Metrics to Track

Indexed Pages: The number of your pages in Google’s index (check via Google Search Console)

Crawl Errors: Any problems Google encountered while crawling your site

Average Crawl Rate: How often Google requests pages from your site (shown in the Crawl stats report in Search Console’s settings)

Page Speed: How quickly your pages load for both users and crawlers

Setting Up Monitoring

Create a simple spreadsheet to track these metrics monthly:

- Total indexed pages

- New crawl errors

- Pages with indexing issues

- Overall site health score

Frequently Asked Questions

1. How long does it take for Google to index new pages?

It varies widely, but typically:

- High-authority sites: Hours to days

- Medium-authority sites: Days to weeks

- New or low-authority sites: Weeks to months

You can speed this up by requesting indexing through the URL Inspection tool in Google Search Console and ensuring good internal linking.

2. Should I submit every page to Google Search Console?

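Not individually. Your XML sitemap should list every page you want indexed, and Google will discover other pages through crawling if they’re properly linked. Save manual indexing requests in the URL Inspection tool for your most important new or updated pages.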

3. Can I have too many pages indexed?

Quality matters more than quantity. It’s better to have 100 high-quality indexed pages than 1,000 thin or duplicate pages.

4. What’s the difference between crawl budget and indexing?

Crawl budget is how many pages Google can and wants to crawl on your site in a given period. Indexing is whether Google chooses to add crawled pages to its database. A page can be crawled but not indexed.

5. Do I need to worry about JavaScript and SEO?

Modern Google can handle JavaScript reasonably well, but it’s still best practice to ensure your important content is accessible without JavaScript when possible.

6. How often should I check my indexing status?

Monthly checks are usually sufficient unless you’re making major site changes. Then you should monitor more closely.

Your Action Plan for Better Crawlability and Indexability

Ready to get started? Here’s your week-by-week action plan:

Week 1: Assessment

- Set up Google Search Console if you haven’t already

- Check how many of your pages are indexed using the site: search

- Identify any obvious crawling errors in Google Search Console

Week 2: Quick Wins

- Fix any broken internal links

- Create or update your XML sitemap

- Submit your sitemap to Google Search Console

Week 3: Content Audit

- Review your thinnest content pages

- Either improve them or consider removing/consolidating them

- Check for duplicate content issues

Week 4: Technical Cleanup

- Review your robots.txt file

- Check for any accidental noindex tags

- Ensure your site loads quickly on mobile

Ongoing Maintenance

- Monthly Google Search Console check-ins

- Quarterly internal link audits

- Regular content updates and improvements

Conclusion

Crawlability and indexability aren’t glamorous topics, but they’re absolutely crucial for SEO success. Think of them as the plumbing of your website – when they work properly, you don’t think about them. When they break, everything else falls apart.

The good news is that most crawlability and indexability issues are fixable with some patience and attention to detail. You don’t need to be a technical expert – you just need to understand the basics and consistently apply them.

Start with the fundamentals: make sure your pages are linked properly, your content is valuable, and your technical setup isn’t blocking Google’s crawlers. Once you have these basics covered, you can move on to more advanced optimizations.

Remember, SEO is a marathon, not a sprint. Focus on building a solid foundation with good crawlability and indexability, and the rest of your SEO efforts will be much more effective.

Your website deserves to be found by the people searching for what you offer. With the right approach to crawlability and indexability, you’ll give your content the best possible chance to shine in Google’s search results.

Now stop reading and start implementing – your future search rankings will thank you for it.

Hi, I’m Mitu Chowdhary, an SEO enthusiast turned expert who genuinely enjoys helping people understand how search engines work. With this blog, I focus on making SEO feel less overwhelming and more approachable for anyone who wants to learn, whether you’re just getting started or running a business and trying to grow online.

I write for beginners, small business owners, service providers — anyone curious about how to get found on Google and build a real online presence. My goal is to break down SEO into simple, practical steps that you can actually use, without the confusing technical jargon.

Every post I share comes from a place of wanting to help — not just to explain what SEO is, but to show you how to make it work for you. If something doesn’t make sense, I want this blog to be the place where it finally clicks.

I believe good SEO is about clarity, consistency, and a bit of creativity — and that’s exactly what I try to bring to every article here.

Thanks for stopping by. I hope you find something helpful here!