Contents

- 1 What is Robots.txt? Understanding the Fundamentals

- 2 How Robots.txt Works

- 3 Understanding the Structure & Syntax of Robots.txt File

- 4 Types of Web Crawlers & Strategic User-Agent Targeting

- 5 Strategic Uses of Robots.txt for SEO Success

- 6 What to Block vs. What NOT to Block

- 7 Robots.txt and Google’s Ranking Factors

- 8 Common Robots.txt Mistakes (With Real Examples & Fixes)

- 9 Creating Your First Robots.txt File: Step-by-Step Tutorial

- 10 Advanced Robots.txt Strategies for Different Website Types

- 11 International SEO and Robots.txt

- 12 Testing & Validation: Ensuring Perfect Implementation

- 13 Robots.txt vs. Other SEO Directives: Understanding the Ecosystem

- 14 Algorithm Updates & Robots.txt: Staying Current

- 15 Industry-Specific Implementation Examples

- 16 Troubleshooting Common Issues

- 17 Performance Impact and ROI Analysis

- 18 Advanced Debugging and Monitoring

- 19 Enterprise-Level Robots.txt Management

- 20 Future of Robots.txt and Emerging Trends

- 21 Frequently Asked Questions (FAQs)

- 21.1 1. What is robots.txt and why is it important for SEO?

- 21.2 2. Where should I place my robots.txt file?

- 21.3 3. Can robots.txt improve my search engine rankings?

- 21.4 4. What’s the difference between robots.txt and noindex meta tags?

- 21.5 5. Should I block CSS and JavaScript files in robots.txt?

- 21.6 6. How do I test my robots.txt file?

- 21.7 7. What happens if I don’t have a robots.txt file?

- 21.8 8. Can robots.txt completely block search engines from my site?

- 21.9 9. How often should I update my robots.txt file?

- 21.10 10. What are the most common robots.txt mistakes?

- 21.11 11. Do all search engines respect robots.txt?

- 21.12 12. Can robots.txt affect my Core Web Vitals scores?

- 21.13 13. How does robots.txt work with XML sitemaps?

- 21.14 14. Should e-commerce sites use robots.txt differently?

- 21.15 15. What’s the relationship between robots.txt and crawl budget?

- 21.16 16. Can I use robots.txt for international SEO?

- 21.17 17. How do I handle robots.txt for subdirectories vs. subdomains?

- 21.18 18. What should I do if my robots.txt is blocking important pages?

- 21.19 19. Can robots.txt help with duplicate content issues?

- 21.20 20. How does robots.txt affect mobile SEO?

- 22 Conclusion: Mastering Robots.txt for SEO Success

Imagine this: You’ve spent months crafting the perfect website. Your content is stellar, your design is flawless, and your products are exactly what customers need. But there’s an invisible bouncer at your website’s front door, and it’s been turning away the most important visitors you’ll ever have – search engine bots.

This digital bouncer is called robots.txt, and it’s either your SEO’s best friend or its worst enemy. The difference between these two outcomes often comes down to a single line of code that 73% of website owners get wrong, according to recent technical SEO audits.

In this comprehensive guide, you’ll discover how this seemingly simple text file can make or break your search engine rankings, and more importantly, how to use it strategically to boost your SEO performance. Whether you’re a complete beginner or looking to refine your technical SEO knowledge, this guide will transform how you think about search engine optimization.

What You’ll Learn:

- The exact mechanics of how robots.txt affects search engine crawling and indexing

- Critical mistakes that could be blocking your content from ranking

- Advanced strategies used by top-performing websites to optimize crawl budget

- Step-by-step implementation guide with real-world examples

- How robots.txt integrates with Google’s latest algorithm updates

What is Robots.txt? Understanding the Fundamentals

The Simple Definition

Robots.txt is a plain text file that lives in your website’s root directory and communicates with search engine crawlers (also called bots or spiders) about which parts of your website they can and cannot access. Think of it as a set of instructions or guidelines that you provide to search engines like Google, Bing, and others.

The Security Guard Analogy

Imagine your website is a massive office building, and search engine bots are visitors trying to enter. The robots.txt file acts like a security guard at the front desk, holding a list of instructions:

- “Googlebot, you can visit floors 1-10, but stay out of the private conference rooms on floor 11.”

- “Bingbot, you’re welcome everywhere except the maintenance areas.”

- “Social media crawlers, please only visit our public gallery on floor 2.”

This analogy perfectly captures how robots.txt works – it’s not a locked door (bots can still technically access blocked areas), but rather a polite request that most reputable crawlers respect.

How Robots.txt Works

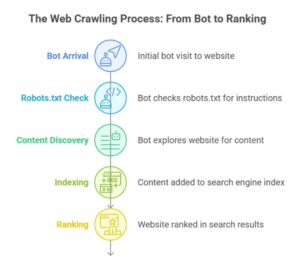

The Complete Web Crawling Journey

Understanding how bots interact with your site helps clarify why robots.txt is so powerful. Here’s the simplified version of the key stages of the full journey:

- Bot Arrival

A search engine crawler reaches your website and immediately looks for the robots.txt file. - Robots.txt Check

The bot reads the file to decide which URLs it’s allowed to crawl based on user-agent rules. - Content Discovery

If permitted, the bot follows internal links and sitemap references to explore your site’s content. - Indexing Decision

Crawled pages are evaluated for indexation based on tags, directives, and content quality. - Ranking Outcome

Indexed pages are ranked according to relevance, E-E-A-T signals, and user experience metrics.

Search Engine Mechanics Deep Dive

Googlebot Behavior: Google’s crawler checks robots.txt files approximately every 24 hours for updates. This means changes to your robots.txt file typically take effect within a day, though it can sometimes take longer for all of Google’s systems to update.

Caching Mechanisms: Search engines cache robots.txt files to avoid repeatedly requesting them during crawling sessions. This improves efficiency but means immediate changes aren’t always reflected instantly.

Error Handling: If your robots.txt file is temporarily unavailable (server error, etc.), most search engines will assume they have permission to crawl your entire site until they can successfully retrieve the file again.

Understanding the Structure & Syntax of Robots.txt File

Basic Components

A properly structured robots.txt file contains several key components, each serving a specific purpose in guiding search engine behavior:

# This is a comment - bots ignore these lines User-agent: * Disallow: /private/ Allow: /public/ Crawl-delay: 1 User-agent: Googlebot Disallow: /temp/ Allow: / Sitemap: https://yourwebsite.com/sitemap.xml

Component Breakdown:

- Comments (#) Lines beginning with # are comments and are ignored by crawlers. Use these to document your robots.txt strategy for future reference.

- User-agent Directive Specifies which crawler the following rules apply to. The asterisk (*) is a wildcard meaning “all crawlers.”

- Disallow Directive Tells the specified crawler not to access the listed directory or file.

- Allow Directive Explicitly permits access to specific areas, often used to override broader disallow rules.

- Crawl-delay Directive Requests that the crawler wait a specified number of seconds between requests (not supported by all crawlers).

- Sitemap Directive Provides the location of your XML sitemap, helping crawlers discover your content more efficiently.

Advanced Syntax Patterns

Wildcard Usage:

User-agent: * Disallow: /*.pdf$ # Blocks all PDF files Disallow: /*?category=* # Blocks URLs with category parameters Disallow: /search?* # Blocks search result pages

Multiple User-agent Groups:

User-agent: Googlebot Disallow: /admin/ Allow: /admin/public/ User-agent: Bingbot Disallow: /temp/ Crawl-delay: 2 User-agent: * Disallow: /private/

Types of Web Crawlers & Strategic User-Agent Targeting

Major Search Engine Bots

Understanding different types of crawlers helps you create more targeted robots.txt strategies:

Google Crawlers:

- Googlebot: Primary web crawler

- Googlebot-Mobile: Mobile-specific crawler

- Googlebot-Image: Image content crawler

- Googlebot-Video: Video content crawler

Other Major Search Engines:

- Bingbot: Microsoft Bing’s crawler

- Slurp: Yahoo’s crawler (now powered by Bing)

- DuckDuckBot: DuckDuckGo’s crawler

- Yandexbot: Russia’s Yandex search engine

- Baiduspider: China’s Baidu search engine

Specialized Crawlers & Tools

SEO Tool Crawlers:

- AhrefsBot: Ahrefs backlink analysis

- SemrushBot: SEMrush SEO tool crawler

- MJ12bot: Majestic SEO crawler

- DotBot: Moz’s crawler

Social Media Crawlers:

- facebookexternalhit: Facebook link previews

- Twitterbot: Twitter card information

- LinkedInBot: LinkedIn content previews

Strategic Consideration: Some websites block SEO tool crawlers to prevent competitors from analyzing their content strategies, while others allow them to maintain visibility in backlink analysis tools.

Strategic Uses of Robots.txt for SEO Success

Content Siloing and Topic Authority

Implementation Strategy: Use robots.txt to guide crawlers toward your most important content clusters while blocking access to less valuable pages. This helps search engines understand your site’s topical authority and content hierarchy.

User-agent: * # Block low-value pages Disallow: /search/ Disallow: /tag/ Disallow: /*?print=* # Allow important content clusters Allow: /seo-guide/ Allow: /digital-marketing/ Allow: /content-strategy/

Crawl Budget Optimization

Understanding Crawl Budget: Search engines allocate a specific amount of time and resources to crawling your website, known as “crawl budget.” Websites with millions of pages need to be strategic about how this budget is used.

Optimization Techniques:

- Block duplicate content variations (print versions, filtered results)

- Prevent crawling of infinite scroll or pagination loops

- Block administrative areas and private content

- Focus crawler attention on high-value content

Technical SEO Integration

Core Web Vitals Consideration: Never block CSS or JavaScript files that are essential for page rendering, as this can negatively impact Core Web Vitals scores and user experience metrics.

Mobile-First Indexing: Ensure your robots.txt directives don’t inadvertently block mobile crawlers from accessing critical resources.

What to Block vs. What NOT to Block

Safe to Block Categories

Administrative Areas:

User-agent: * Disallow: /wp-admin/ Disallow: /admin/ Disallow: /dashboard/ Disallow: /login/

Duplicate Content Patterns:

User-agent: * Disallow: /*?utm_* # UTM tracking parameters Disallow: /*?sort=* # Sorting parameters Disallow: /*?filter=* # Filter parameters Disallow: /print/ # Print versions of pages

Development and Testing Areas:

User-agent: * Disallow: /dev/ Disallow: /staging/ Disallow: /test/ Disallow: /beta/

NEVER Block These Critical Elements

CSS and JavaScript Files: Blocking these files prevents Google from properly rendering your pages, which can severely impact your Core Web Vitals scores and search rankings.

# WRONG - Don't do this! User-agent: * Disallow: /css/ Disallow: /js/ Disallow: *.css Disallow: *.js # CORRECT - Allow access to styling and scripts User-agent: * Allow: /css/ Allow: /js/

Important Landing Pages: Never block pages that you want to rank in search results, even if they’re not linked from your main navigation.

XML Sitemaps: Your sitemap should always be accessible to search engines.

Canonical Versions: Don’t block the canonical (preferred) version of your pages.

Robots.txt and Google’s Ranking Factors

E-E-A-T (Experience, Expertise, Authoritativeness, Trustworthiness)

Impact on Authority Signals: Properly implemented robots.txt can enhance your site’s E-E-A-T by:

- Ensuring crawlers focus on high-quality, authoritative content

- Preventing indexation of low-quality or duplicate pages

- Maintaining a clean, professional site structure

Expert Tip: For YMYL (Your Money or Your Life) topics like health, finance, or legal advice, ensure your robots.txt doesn’t block author bio pages or credential information that establishes expertise.

Core Web Vitals Integration

Critical Relationship: Blocking CSS or JavaScript files can cause pages to render incorrectly, leading to poor Largest Contentful Paint (LCP) and Cumulative Layout Shift (CLS) scores.

Best Practice: Always test your robots.txt changes in Google Search Console to ensure they don’t negatively impact page rendering.

Page Experience Signals

User Experience Optimization: Your robots.txt strategy should support, not hinder, positive user experience signals:

- Fast loading times (don’t block essential resources)

- Mobile usability (ensure mobile crawlers have access)

- HTTPS security (don’t create mixed content issues)

Common Robots.txt Mistakes (With Real Examples & Fixes)

Mistake #1: Blocking Critical Resources

The Problem:

# WRONG - This blocks essential styling User-agent: * Disallow: /wp-content/themes/

The Fix:

# CORRECT - Allow themes but block specific sensitive files User-agent: * Allow: /wp-content/themes/ Disallow: /wp-content/themes/*/functions.php

Real-World Impact: A major e-commerce site accidentally blocked their CSS directory, causing Google to see their pages as broken and unstyled, resulting in a 40% drop in organic traffic within two weeks.

Mistake #2: Overly Broad Wildcards

The Problem:

# WRONG - This might block more than intended User-agent: * Disallow: /*admin*

The Fix:

# CORRECT - Be specific with your blocking User-agent: * Disallow: /admin/ Disallow: /wp-admin/

Mistake #3: Case Sensitivity Issues

The Problem: Robots.txt is case-sensitive, so /Admin/ and /admin/ are treated as different directories.

The Solution: Always use consistent, lowercase naming conventions and test thoroughly.

Mistake #4: Conflicting Directives

The Problem:

# CONFUSING - Contradictory rules User-agent: * Disallow: /blog/ Allow: /blog/

The Fix: Be explicit and use more specific allow rules when needed:

User-agent: * Disallow: /blog/private/ Allow: /blog/

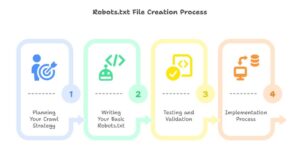

Creating Your First Robots.txt File: Step-by-Step Tutorial

Phase 1: Planning Your Crawl Strategy

Content Audit: Before creating your robots.txt file, conduct a thorough content audit:

- Identify your most important pages and content clusters

- List administrative areas and private content

- Find duplicate content patterns

- Catalog resources (CSS, JS, images) that are essential for rendering

SEO Goal Alignment: Consider your primary SEO objectives:

- Are you trying to improve crawl efficiency?

- Do you need to prevent duplicate content indexation?

- Are you protecting sensitive areas?

Phase 2: Writing Your Basic Robots.txt

Starter Template:

# Robots.txt for YourWebsite.com # Created: [Date] # Purpose: Basic SEO optimization User-agent: * # Allow access to all content by default Allow: / # Block common WordPress admin areas Disallow: /wp-admin/ Disallow: /wp-login.php # Block search and filter pages to prevent duplicate content Disallow: /search/ Disallow: /*?s=* Disallow: /*?category=* # Allow important CSS and JavaScript Allow: /wp-content/themes/ Allow: /wp-content/plugins/ # Sitemap location Sitemap: https://yourwebsite.com/sitemap.xml Sitemap: https://yourwebsite.com/news-sitemap.xml

Phase 3: Testing and Validation

Google Search Console Method:

- Log into Google Search Console

- Navigate to “Crawl” > “robots.txt Tester”

- Paste your robots.txt content

- Test specific URLs to ensure proper blocking/allowing

- Check for syntax errors

Manual Testing: Visit yourwebsite.com/robots.txt in a browser to ensure the file is accessible and displays correctly.

Phase 4: Implementation Process

File Creation:

- Create a plain text file named “robots.txt” (no file extension)

- Upload to your website’s root directory

- Ensure the file is accessible at yourdomain.com/robots.txt

- Set proper file permissions (typically 644)

WordPress-Specific Notes: Some WordPress plugins can generate robots.txt files dynamically. If you’re using such plugins, you may need to disable this feature to use a custom file.

Advanced Robots.txt Strategies for Different Website Types

E-commerce Optimization

Strategic Blocking for Online Stores:

User-agent: * # Block cart and checkout pages Disallow: /cart/ Disallow: /checkout/ Disallow: /my-account/ # Block filtered/sorted product pages to prevent duplicate content Disallow: /*?orderby=* Disallow: /*?filter_* Disallow: /*?min_price=* Disallow: /*?max_price=* # Allow product pages and categories Allow: /product/ Allow: /category/ Allow: /shop/ # Allow essential resources Allow: /wp-content/themes/ Allow: /wp-content/plugins/ Sitemap: https://yourstore.com/product-sitemap.xml Sitemap: https://yourstore.com/category-sitemap.xml

Blog and Content Sites

Content-Focused Strategy:

User-agent: * # Block tag and date archives to reduce duplicate content Disallow: /tag/ Disallow: /date/ Disallow: /author/ # Block search results and comments Disallow: /search/ Disallow: /*?s=* Disallow: /*/feed/ # Allow all blog content Allow: /blog/ Allow: /category/ Allow: / # Special consideration for news sites User-agent: Googlebot-News Allow: /news/ Allow: /blog/ Sitemap: https://yourblog.com/sitemap.xml Sitemap: https://yourblog.com/news-sitemap.xml

Local Business Websites

Location-Specific Optimization:

User-agent: * # Standard blocking Disallow: /admin/ Disallow: /private/ # Allow location pages Allow: /locations/ Allow: /services/ Allow: /contact/ # Block booking system backend Disallow: /booking-system/ Disallow: /calendar/ # Allow local business schema markup Allow: /structured-data/ Sitemap: https://localbusiness.com/sitemap.xml

International SEO and Robots.txt

Multi-Language Website Considerations

Hreflang and Robots.txt Coordination:

User-agent: * # Allow all language versions Allow: /en/ Allow: /es/ Allow: /fr/ Allow: /de/ # Block language-specific admin areas Disallow: /*/admin/ Disallow: /*/wp-admin/ # Allow language-specific sitemaps Sitemap: https://website.com/en/sitemap.xml Sitemap: https://website.com/es/sitemap.xml Sitemap: https://website.com/fr/sitemap.xml

Regional Search Engine Targeting:

# Optimize for local search engines User-agent: Googlebot Allow: / User-agent: Bingbot Allow: / Crawl-delay: 1 User-agent: Yandexbot Allow: / Crawl-delay: 2 # Block or allow region-specific crawlers as needed User-agent: Baiduspider Disallow: /

Testing & Validation: Ensuring Perfect Implementation

Google Search Console Deep Dive

Step-by-Step Testing Process:

- Access the Robots.txt Tester:

- Open Google Search Console

- Select your property

- Navigate to “Legacy tools and reports” > “robots.txt Tester”

- Test Specific URLs:

- Enter important URLs from your site

- Check if they’re allowed or blocked

- Pay special attention to:

- Homepage

- Key landing pages

- CSS/JavaScript files

- Sitemap locations

- Validation Checklist:

- [ ] File is accessible at domain.com/robots.txt

- [ ] No syntax errors reported

- [ ] Critical resources are not blocked

- [ ] Important pages are accessible

- [ ] Sitemap directive is included and accessible

Third-Party Tool Validation

Screaming Frog SEO Spider:

- Configure the crawler to respect robots.txt

- Run a full site crawl

- Review blocked URLs to ensure they align with your strategy

- Check for any unexpected blocking

Website Auditing Tools:

- SEMrush Site Audit: Identifies robots.txt issues

- Ahrefs Site Audit: Flags potential blocking problems

- DeepCrawl: Comprehensive robots.txt analysis

Regular Monitoring and Maintenance

Monthly Checks:

- Verify robots.txt file accessibility

- Review Google Search Console for crawl errors

- Monitor organic traffic for unexpected drops

- Check for new areas that might need blocking

Algorithm Update Response: When Google releases major algorithm updates, review your robots.txt strategy to ensure it aligns with new best practices.

Robots.txt vs. Other SEO Directives: Understanding the Ecosystem

Robots.txt vs. Meta Robots Tags

When to Use Each:

Robots.txt – Use for:

- Directory-level blocking

- File type restrictions

- Crawl budget optimization

- Server resource protection

Meta Robots Tags – Use for:

- Page-level control

- Preventing indexation while allowing crawling

- Specific directive implementation (nofollow, noarchive, etc.)

Example Coordination:

<!-- In HTML head - prevents indexing but allows crawling --> <meta name="robots" content="noindex, follow"> # In robots.txt - prevents crawling entirely User-agent: * Disallow: /private-section/

Integration with Canonical Tags

Complementary Strategy:

<!-- Canonical tag in HTML --> <link rel="canonical" href="https://example.com/preferred-version/"> # Robots.txt blocking parameter variations User-agent: * Disallow: /*?utm_* Disallow: /*?ref=* Allow: /preferred-page/

Strategic Coordination: Use canonical tags to indicate preferred versions while using robots.txt to prevent crawling of duplicate parameter variations.

XML Sitemaps Integration

Perfect Partnership: Your robots.txt file should include sitemap directives to help crawlers discover your content efficiently:

User-agent: * Disallow: /private/ Allow: / # Multiple sitemap types for comprehensive coverage Sitemap: https://yoursite.com/sitemap.xml Sitemap: https://yoursite.com/image-sitemap.xml Sitemap: https://yoursite.com/video-sitemap.xml Sitemap: https://yoursite.com/news-sitemap.xml

Algorithm Updates & Robots.txt: Staying Current

Historical Impact Analysis

Major Algorithm Updates Affecting Robots.txt:

Mobile-First Indexing (2018-2021):

- Impact: Sites blocking mobile user-agents saw ranking drops

- Solution: Ensure mobile crawlers have equal access to desktop crawlers

Core Web Vitals Update (2021):

- Impact: Sites blocking CSS/JS files saw decreased page experience scores

- Solution: Never block render-critical resources

Helpful Content Update (2022-2024):

- Impact: Focus shifted to ensuring crawlers can access high-quality, helpful content

- Solution: Review robots.txt to ensure it doesn’t block valuable content

Current Best Practices (2025)

Google’s Latest Recommendations:

- Resource Accessibility: Ensure CSS, JavaScript, and images necessary for page rendering are accessible

- Mobile Parity: Don’t create different rules for mobile vs. desktop crawlers

- Core Web Vitals Support: Robots.txt should support, not hinder, page experience metrics

- AI Content Discovery: With AI-powered search features, ensure important content remains crawlable

Future-Proofing Strategies:

- Regular audits every quarter

- Monitor Google’s Webmaster Guidelines updates

- Test major changes in staging environments

- Keep documentation of robots.txt changes and rationale

Industry-Specific Implementation Examples

SaaS and Technology Companies

Advanced B2B Strategy:

User-agent: * # Block trial and demo environments Disallow: /demo/ Disallow: /trial/ Disallow: /sandbox/ # Block user dashboard areas Disallow: /app/ Disallow: /dashboard/ Disallow: /account/ # Allow documentation and help content Allow: /docs/ Allow: /help/ Allow: /api/ # Allow marketing and sales pages Allow: /features/ Allow: /pricing/ Allow: /case-studies/ # Block internal tools and admin Disallow: /admin/ Disallow: /internal/ Disallow: /dev-tools/ # Special handling for API documentation User-agent: Googlebot Allow: /api/docs/ Allow: /developer/ Sitemap: https://saascompany.com/sitemap.xml Sitemap: https://saascompany.com/docs-sitemap.xml

Educational Institutions

Academic Website Optimization:

User-agent: * # Block student and faculty portals Disallow: /student-portal/ Disallow: /faculty/ Disallow: /staff/ Disallow: /login/ # Block internal systems Disallow: /lms/ Disallow: /gradebook/ Disallow: /admin/ # Allow public academic content Allow: /courses/ Allow: /programs/ Allow: /research/ Allow: /news/ Allow: /events/ # Allow library and resource pages Allow: /library/ Allow: /resources/ # Block application systems Disallow: /apply/ Disallow: /application/ Sitemap: https://university.edu/sitemap.xml Sitemap: https://university.edu/courses-sitemap.xml

Healthcare and Medical Practices

HIPAA-Compliant Strategy:

User-agent: * # Strict blocking of patient areas Disallow: /patient-portal/ Disallow: /records/ Disallow: /appointments/ Disallow: /billing/ # Block internal medical systems Disallow: /ehr/ Disallow: /staff/ Disallow: /admin/ # Allow public health information Allow: /services/ Allow: /doctors/ Allow: /health-info/ Allow: /blog/ # Allow contact and location info Allow: /contact/ Allow: /locations/ # Block scheduling systems Disallow: /schedule/ Disallow: /booking/ Sitemap: https://medicalcenter.com/sitemap.xml

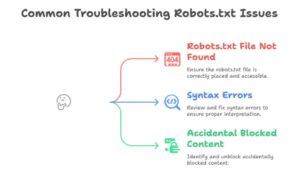

Troubleshooting Common Issues

Issue #1: Robots.txt File Not Found (404 Error)

Symptoms:

- Google Search Console reports robots.txt as inaccessible

- Crawlers default to crawling everything

- Inconsistent crawling behavior

Diagnostic Steps:

- Visit yoursite.com/robots.txt directly in browser

- Check file permissions (should be 644)

- Verify file location in root directory

- Test with different browsers and devices

Solutions:

# Correct file placement /public_html/robots.txt # For most hosting /www/robots.txt # For some configurations /htdocs/robots.txt # Alternative structure

Issue #2: Syntax Errors Causing Misinterpretation

Common Syntax Problems:

# WRONG - Missing colon User-agent * Disallow /admin/ # WRONG - Incorrect spacing User-agent:* Disallow:/admin/ # CORRECT - Proper formatting User-agent: * Disallow: /admin/

Validation Tools:

- Google Search Console robots.txt Tester

- Online robots.txt validators

- Screaming Frog SEO Spider

Issue #3: Accidental Blocking of Important Content

Detection Methods:

- Monitor organic traffic drops in Google Analytics

- Check Google Search Console for crawl errors

- Use site:yoursite.com searches to see indexed pages

- Regular SEO audits with professional tools

Recovery Process:

- Identify the problematic directive

- Update robots.txt file immediately

- Request reindexing in Google Search Console

- Monitor recovery over 2-4 weeks

Performance Impact and ROI Analysis

Measuring Robots.txt Effectiveness

Key Performance Indicators:

- Crawl Budget Efficiency

- Pages crawled per day (from Search Console)

- Important pages indexed vs. total pages

- Crawl error reduction percentage

- SEO Performance Metrics

- Organic traffic growth

- Keyword ranking improvements

- Page indexation rates

- Technical Performance

- Site speed improvements (from reduced crawler load)

- Server resource utilization

- Core Web Vitals scores

Real-World Case Studies

Case Study 1: E-commerce Site Optimization

- Challenge: 2M+ product variations causing crawl budget waste

- Solution: Blocked filtered/sorted pages, allowed canonical versions

- Results: 45% increase in important page indexation, 23% organic traffic growth

Case Study 2: News Website Recovery

- Challenge: Accidentally blocked CSS files, causing rendering issues

- Solution: Updated robots.txt to allow all styling resources

- Results: Core Web Vitals scores improved by 60%, search rankings recovered within 3 weeks

Case Study 3: SaaS Company Lead Generation

- Challenge: Internal tools being indexed instead of marketing content

- Solution: Strategic blocking of app areas, emphasis on content marketing pages

- Results: 38% increase in organic lead generation, improved brand authority

Advanced Debugging and Monitoring

Setting Up Automated Monitoring

Google Search Console Alerts:

- Enable crawl error notifications

- Set up performance monitoring

- Track indexation changes

Third-Party Monitoring Tools:

# Example monitoring script (pseudocode) import requests import time def check_robots_txt(): response = requests.get('https://yoursite.com/robots.txt') if response.status_code != 200: send_alert('Robots.txt not accessible!') if 'Disallow: /' in response.text: send_alert('Site completely blocked!') return response.text # Run check every hour schedule.every().hour.do(check_robots_txt)

Log Analysis for Crawler Behavior

Server Log Insights: Monitor your server logs to understand how different crawlers interact with your robots.txt file:

# Example log analysis commands grep "robots.txt" /var/log/apache2/access.log grep "Googlebot" /var/log/apache2/access.log | grep "robots.txt"

Key Metrics to Track:

- Frequency of robots.txt requests

- User-agents accessing the file

- Response times and errors

- Crawler compliance rates

Enterprise-Level Robots.txt Management

Large Website Considerations

Multi-Domain Strategy:

# Main corporate site https://company.com/robots.txt User-agent: * Disallow: /internal/ Allow: / # Product subdomain https://products.company.com/robots.txt User-agent: * Disallow: /admin/ Allow: /catalog/ # Support subdomain https://support.company.com/robots.txt User-agent: * Allow: / Disallow: /tickets/

Dynamic Robots.txt Generation: For large sites with frequently changing content, consider programmatic robots.txt generation:

<?php // Example dynamic robots.txt generation header('Content-Type: text/plain'); echo "User-agent: *\n"; echo "Disallow: /admin/\n"; echo "Disallow: /private/\n"; // Dynamic sitemap inclusion $sitemaps = glob('/path/to/sitemaps/*.xml'); foreach($sitemaps as $sitemap) { echo "Sitemap: https://yoursite.com/" . basename($sitemap) . "\n"; } ?>

Team Collaboration and Documentation

Robots.txt Documentation Template:

# Robots.txt Documentation # Company: [Company Name] # Last Updated: [Date] # Updated By: [Name/Team] # Version: [Version Number] # BUSINESS RULES: # 1. Block all admin areas for security # 2. Allow all product pages for SEO # 3. Block duplicate content from filters # 4. Maintain crawl budget for important content # CHANGE LOG: # v1.2 - 2025-06-01 - Added new product category allowances # v1.1 - 2025-05-15 - Blocked new admin section # v1.0 - 2025-05-01 - Initial implementation

Future of Robots.txt and Emerging Trends

AI and Machine Learning Impact

Current Developments:

- Google’s AI-powered understanding of website intent

- Improved context recognition beyond simple directives

- Enhanced crawling efficiency through machine learning

Preparing for the Future:

- Focus on semantic clarity in your robots.txt strategy

- Ensure your blocking decisions align with content quality

- Monitor Google’s AI update announcements

Voice Search and Mobile-First Considerations

Voice Search Optimization:

User-agent: * # Ensure voice-search relevant content is accessible Allow: /faq/ Allow: /how-to/ Allow: /local/ # Block non-voice-relevant admin content Disallow: /admin/ Disallow: /dashboard/

Progressive Web App (PWA) Considerations: Modern PWAs require careful robots.txt management to ensure app shell resources are accessible while protecting sensitive functionality.

International Expansion and Robots.txt

Global SEO Strategy:

# Multi-regional approach User-agent: * Allow: /us/ Allow: /uk/ Allow: /ca/ Allow: /au/ # Region-specific restrictions Disallow: /us/internal/ Disallow: /uk/private/ # Localized sitemaps Sitemap: https://site.com/us/sitemap.xml Sitemap: https://site.com/uk/sitemap.xml

Frequently Asked Questions (FAQs)

1. What is robots.txt and why is it important for SEO?

Robots.txt is a text file that tells search engine crawlers which pages or sections of your website they can or cannot access. It’s crucial for SEO because it helps you:

- Control how search engines crawl your site

- Optimize crawl budget by directing bots to important content

- Prevent duplicate content issues

- Protect sensitive areas from being indexed

- Improve overall site performance and search rankings

2. Where should I place my robots.txt file?

Your robots.txt file must be placed in the root directory of your website, accessible at https://yourdomain.com/robots.txt. It cannot be placed in subdirectories or given a different name. Search engines always look for it at this exact location.

3. Can robots.txt improve my search engine rankings?

While robots.txt doesn’t directly improve rankings, it can significantly impact your SEO performance by:

- Preventing crawl budget waste on unimportant pages

- Avoiding duplicate content penalties

- Ensuring crawlers focus on your high-value content

- Improving site performance by reducing server load

- Supporting proper indexation of important pages

Robots.txt: Prevents crawlers from accessing pages entirely. If a page is blocked by robots.txt, search engines won’t crawl it, but it might still appear in search results if linked from other sites.

Noindex meta tags: Allow crawlers to access pages but prevent them from being indexed in search results. The page is crawled but not stored in the search engine’s index.

5. Should I block CSS and JavaScript files in robots.txt?

Never block CSS and JavaScript files that are essential for page rendering. Google needs to access these resources to properly understand and rank your pages. Blocking them can:

- Hurt your Core Web Vitals scores

- Cause pages to appear broken to search engines

- Negatively impact your search rankings

- Create poor user experience signals

6. How do I test my robots.txt file?

Use these methods to test your robots.txt file:

- Google Search Console: Use the robots.txt Tester tool

- Direct access: Visit yoursite.com/robots.txt in a browser

- SEO tools: Use Screaming Frog, SEMrush, or Ahrefs

- Command line: Use curl commands to test accessibility

- Manual verification: Check that blocked pages don’t appear in search results

7. What happens if I don’t have a robots.txt file?

If you don’t have a robots.txt file, search engines will assume they can crawl your entire website. This isn’t necessarily bad for small sites, but larger sites benefit from robots.txt because it:

- Provides control over crawling behavior

- Helps optimize server resources

- Prevents indexation of unwanted content

- Improves crawl efficiency

8. Can robots.txt completely block search engines from my site?

Yes, you can block all search engines with:

User-agent: * Disallow: /

However, this is rarely recommended as it will:

- Remove your site from search results

- Eliminate organic traffic

- Hurt your online visibility

- Only use this for staging/development sites

9. How often should I update my robots.txt file?

Update your robots.txt file when:

- You launch new website sections

- You restructure your site architecture

- You identify crawl budget issues

- You implement new SEO strategies

- Google releases relevant algorithm updates

- You notice crawling problems in Search Console

Regular quarterly reviews are recommended for most websites.

10. What are the most common robots.txt mistakes?

The most frequent mistakes include:

- Blocking CSS/JavaScript files

- Using incorrect syntax (missing colons, wrong spacing)

- Overly broad wildcard usage

- Placing the file in wrong location

- Blocking important pages accidentally

- Not testing changes before implementation

- Forgetting to include sitemap directives

11. Do all search engines respect robots.txt?

Most reputable search engines (Google, Bing, Yahoo) respect robots.txt directives. However:

- It’s not legally binding – just a guideline

- Malicious crawlers may ignore it

- Some SEO tools may not follow restrictions

- Social media crawlers have varying compliance

- Always use additional security measures for truly sensitive content

12. Can robots.txt affect my Core Web Vitals scores?

Yes, robots.txt can impact Core Web Vitals if you:

- Block essential CSS or JavaScript files

- Prevent proper page rendering

- Create layout shift issues

- Slow down page loading times

Always ensure render-critical resources remain accessible to search engines.

13. How does robots.txt work with XML sitemaps?

Robots.txt and XML sitemaps work together perfectly:

- Include sitemap directives in robots.txt to help crawlers find your sitemaps

- Use robots.txt to prevent crawling of low-value pages

- Use sitemaps to promote important pages

- Never block your sitemap files with robots.txt

Example:

User-agent: * Disallow: /admin/ Allow: / Sitemap: https://yoursite.com/sitemap.xml

14. Should e-commerce sites use robots.txt differently?

E-commerce sites have unique robots.txt needs:

- Block filtered/sorted product pages to prevent duplicate content

- Allow important product and category pages

- Block cart, checkout, and account pages

- Prevent indexation of internal search results

- Allow product images and essential resources

- Include product sitemaps

15. What’s the relationship between robots.txt and crawl budget?

Crawl budget is the number of pages search engines will crawl on your site. Robots.txt helps optimize crawl budget by:

- Blocking low-value pages (admin, duplicates, filters)

- Directing crawlers to important content

- Reducing server load and crawl time

- Improving crawling efficiency

- Ensuring important pages get crawled regularly

16. Can I use robots.txt for international SEO?

Yes, robots.txt supports international SEO by:

- Allowing different language versions of pages

- Including localized sitemaps

- Blocking region-specific admin areas

- Coordinating with hreflang implementations

- Managing multi-domain international strategies

17. How do I handle robots.txt for subdirectories vs. subdomains?

Subdirectories (site.com/blog/): Use one robots.txt file in the root directory with specific rules for each subdirectory.

Subdomains (blog.site.com): Each subdomain needs its own robots.txt file at its root level.

18. What should I do if my robots.txt is blocking important pages?

If robots.txt is accidentally blocking important pages:

- Identify the problematic directive immediately

- Update the robots.txt file to allow access

- Test the changes using Google Search Console

- Request re-indexing of affected pages

- Monitor recovery in search results over 2-4 weeks

- Implement monitoring to prevent future issues

19. Can robots.txt help with duplicate content issues?

Yes, robots.txt is excellent for managing duplicate content by:

- Blocking parameter-based duplicate pages

- Preventing indexation of filtered/sorted versions

- Blocking print versions of pages

- Managing pagination issues

- Preventing crawler access to duplicate content patterns

20. How does robots.txt affect mobile SEO?

For mobile SEO, ensure your robots.txt:

- Doesn’t block mobile-specific crawlers (Googlebot-Mobile)

- Allows mobile-essential CSS and JavaScript

- Supports mobile-first indexing requirements

- Doesn’t create different rules for mobile vs. desktop

- Includes mobile sitemaps when relevant

Conclusion: Mastering Robots.txt for SEO Success

Robots.txt might seem like a simple text file, but as we’ve explored throughout this comprehensive guide, it’s one of the most powerful tools in your SEO arsenal. When implemented correctly, it can significantly improve your website’s search engine performance, protect your server resources, and ensure that search engines focus on your most valuable content.

Key Takeaways for Immediate Implementation

Essential Actions:

- Audit your current robots.txt file (or create one if missing)

- Test thoroughly using Google Search Console before implementing changes

- Never block essential CSS/JavaScript files

- Include sitemap directives to help crawlers discover your content

- Monitor performance regularly and adjust as needed

Strategic Considerations:

- Align your robots.txt strategy with your overall SEO goals

- Consider your website type and industry-specific needs

- Plan for international expansion and mobile-first indexing

- Document your strategy for team collaboration

- Stay updated with algorithm changes and best practices

The Path Forward

As search engines continue to evolve with AI and machine learning capabilities, the fundamental principles of robots.txt remain constant: guide crawlers to your best content while protecting sensitive areas and optimizing resource usage. The websites that succeed in search rankings are those that take a strategic, well-planned approach to technical SEO elements like robots.txt.

Remember, robots.txt is not a set-it-and-forget-it solution. It requires ongoing attention, testing, and optimization as your website grows and search engine algorithms evolve. Start with the basics, test thoroughly, and gradually implement more advanced strategies as you become comfortable with the fundamentals.

Next Steps in Your SEO Journey

Now that you understand robots.txt, consider exploring these related technical SEO topics:

- XML Sitemaps: Complement your robots.txt strategy

- Meta Robots Tags: Page-level crawling control

- Canonical Tags: Duplicate content management

- Technical SEO Auditing: Comprehensive site optimization

- Core Web Vitals: Performance optimization for rankings

Ready to implement? Start with our basic template, test it thoroughly, and gradually refine your approach based on your specific needs and performance data.

Hi, I’m Mitu Chowdhary — an SEO enthusiast turned expert who genuinely enjoys helping people understand how search engines work. With this Blog, I focus on making SEO feel less overwhelming and more approachable for anyone who wants to learn — whether you’re just getting started or running a business and trying to grow online.

I write for beginners, small business owners, service providers — anyone curious about how to get found on Google and build a real online presence. My goal is to break down SEO into simple, practical steps that you can actually use, without the confusing technical jargon.

Every post I share comes from a place of wanting to help — not just to explain what SEO is, but to show you how to make it work for you. If something doesn’t make sense, I want this blog to be the place where it finally clicks.

I believe good SEO is about clarity, consistency, and a bit of creativity — and that’s exactly what I try to bring to every article here.

Thanks for stopping by. I hope you find something helpful here!